ETL and its processes explained

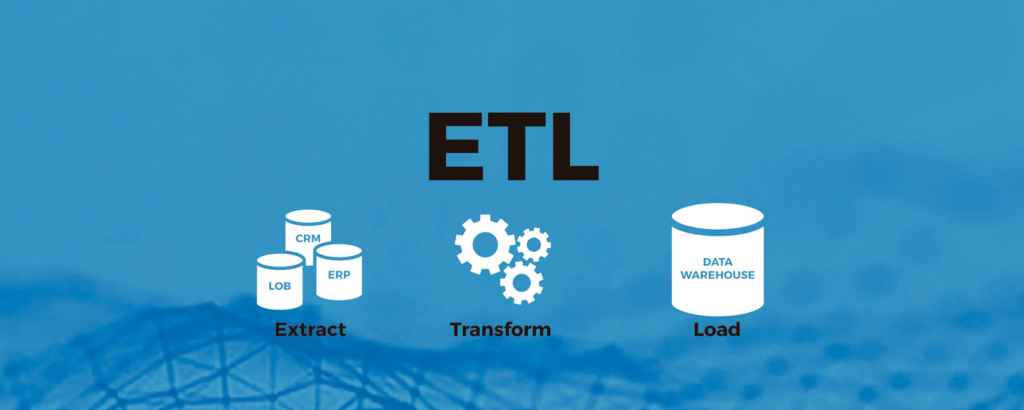

Extract, transform, and load (ETL) is the process of integrating data from multiple sources and bringing it together in one central location. Used effectively, it lets a business harness the data accumulated across the organization and beyond.

The functionality of ETL is pretty straightforward.

It is a trinity of processes: in a broad sense, it means moving data out of one system and making it accessible in another. Modern applications of ETL sometimes require an extra step. With the addition of a “cleaning” phase, it becomes ECTL, or Extract-Clean-Transform-Load. ETL is considered the core of the data integration mechanism, and the entire business intelligence ecosystem depends on it.
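As a rough illustration of how the stages chain together, here is a minimal sketch in Python. The function names, the in-memory lists standing in for the source and the target, and the `email` field are all hypothetical and chosen only for this example.

```python
def extract(source):
    # Copy records out of the source without modifying it.
    return list(source)

def clean(rows):
    # Optional "cleaning" phase (the C in ECTL): drop records with no email.
    return [row for row in rows if row.get("email")]

def transform(rows):
    # Normalize the email so every record follows the same format.
    return [{"email": row["email"].strip().lower()} for row in rows]

def load(rows, target):
    # Append the prepared records to the target store.
    target.extend(rows)
    return target

# Hypothetical source data, used for illustration only.
source = [
    {"email": " Ada@Example.com "},
    {"email": None},
    {"email": "grace@example.com"},
]
warehouse = load(transform(clean(extract(source))), [])
```

Each stage only takes the output of the previous one, so a stage can be swapped out or tested in isolation.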

Processes explained

Extract

Extraction refers to retrieving data from a source (or many sources) and transporting it to staging to prepare it for processing. The extract stage’s primary goal is to retrieve data from the source system without consuming too many resources, which can negatively affect performance.

Data extraction commonly takes one of three forms: (1) update notification, (2) incremental extraction, and (3) full extraction. Update notification is the most straightforward way to extract data from a source system. The system alerts data managers when a value or record has been modified, akin to the notifications on your smartphone. Incremental extraction can be used when a source system cannot send a notification when an update occurs but can identify which records have changed. Full extraction is used when a source system can neither send notifications nor identify the changes that have occurred. In this case, reloading, replicating, or fully extracting the data may be the only option.
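The difference between incremental and full extraction can be sketched as follows. This is a minimal example that assumes the source identifies changes through an `updated_at` column; the `customers` table, its columns, and the in-memory SQLite database are hypothetical stand-ins for a real source system.

```python
import sqlite3

def extract_full(conn):
    """Full extraction: read every row, regardless of when it changed."""
    return conn.execute(
        "SELECT id, name, updated_at FROM customers ORDER BY id"
    ).fetchall()

def extract_incremental(conn, last_watermark):
    """Incremental extraction: read only rows changed after the last run."""
    rows = conn.execute(
        "SELECT id, name, updated_at FROM customers "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark to the newest change seen, or keep the old one.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

# Hypothetical source table, built in memory for illustration only.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, updated_at TEXT)"
)
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Ada", "2024-01-01"), (2, "Grace", "2024-01-05"), (3, "Edsger", "2024-01-09")],
)

all_rows = extract_full(source)
changed_rows, watermark = extract_incremental(source, "2024-01-03")
```

Storing the returned watermark between runs means each run reads only what changed since the previous one, which keeps the load on the source system low.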

Transform

Extracted data is often raw and cannot be used immediately. The transformation phase is one of the most critical steps, as it ensures the quality and consistency of the extracted data. Some industry specialists argue that a separate “cleaning” stage should be included in the process (see ECTL), but the generally accepted framework treats cleaning as a subphase of the transformation stage. The transformation step can involve joining data from several sources, generating aggregates, generating surrogate keys, sorting, deriving new calculated values, applying advanced validation rules such as data flow validation, and transposing rows and columns, among other operations.
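A few of these operations (joining, aggregating, generating surrogate keys, sorting, and deriving calculated values) can be sketched in plain Python. The record layouts and field names below are hypothetical, and a real pipeline would typically use a dedicated tool or library rather than hand-written loops.

```python
from collections import defaultdict
from itertools import count

# Hypothetical extracted records from two sources.
customers = [
    {"customer_id": "C-2", "name": "GRACE"},
    {"customer_id": "C-1", "name": " ada "},
]
orders = [
    {"customer_id": "C-1", "amount": 120.0},
    {"customer_id": "C-1", "amount": 30.0},
    {"customer_id": "C-2", "amount": 75.5},
    {"customer_id": "C-9", "amount": 10.0},  # no matching customer
]

def transform(customers, orders):
    # Aggregate: total amount and number of orders per customer.
    totals = defaultdict(float)
    counts = defaultdict(int)
    for order in orders:
        totals[order["customer_id"]] += order["amount"]
        counts[order["customer_id"]] += 1

    surrogate_keys = count(start=1)
    result = []
    # Sort by the natural key so surrogate keys are assigned deterministically.
    for customer in sorted(customers, key=lambda c: c["customer_id"]):
        cid = customer["customer_id"]
        total = totals.get(cid, 0.0)
        n = counts.get(cid, 0)
        result.append({
            "customer_key": next(surrogate_keys),            # surrogate key
            "customer_id": cid,
            "name": customer["name"].strip().title(),        # cleaning
            "order_count": n,                                 # joined aggregate
            "total_amount": total,
            "average_amount": total / n if n else 0.0,        # derived value
        })
    return result

rows = transform(customers, orders)
```

The join here keeps every customer and attaches its order totals, so the order for the unknown customer `C-9` is left out of the result. Whether such unmatched records should be dropped, logged, or rejected is a validation decision this sketch does not try to settle.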

Load

The last process involves loading the transformed data into the target database. The loading process is largely about performance optimization, as a large volume of data needs to be loaded efficiently and within a relatively short window (commonly referred to as “nights”, since loads have traditionally run overnight). Loading the data accurately is very important at this stage, as failures or bottlenecks can result in a serious loss of data integrity. Rolling back or recovering a failed load can also be challenging and can demand significant resources.

There are two main ways to load data into the target system. Incremental loading is the most common. It generally means periodically loading only new or changed data, in increments or on a schedule. Full loading, or full refresh, is performed far less often, as it entails erasing the existing contents of the target and reloading it with fresh data sets. This type of loading also requires a lot of time and resources.
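The two loading styles can be contrasted with a small sketch. It uses an in-memory SQLite database as a stand-in for the target system; the `dim_customer` table and its columns are hypothetical. Each load runs inside a transaction so that a failure part-way through leaves the target unchanged.

```python
import sqlite3

def load_incremental(conn, rows):
    """Insert new rows and overwrite existing ones, matched on the primary key."""
    with conn:  # commits on success, rolls back if an exception is raised
        conn.executemany(
            "INSERT OR REPLACE INTO dim_customer (customer_id, name) VALUES (?, ?)",
            rows,
        )

def load_full_refresh(conn, rows):
    """Erase the target table and reload it from scratch in one transaction."""
    with conn:
        conn.execute("DELETE FROM dim_customer")
        conn.executemany(
            "INSERT INTO dim_customer (customer_id, name) VALUES (?, ?)",
            rows,
        )

# Hypothetical target table, built in memory for illustration only.
target = sqlite3.connect(":memory:")
target.execute(
    "CREATE TABLE dim_customer (customer_id TEXT PRIMARY KEY, name TEXT)"
)

# Initial full load, followed by an increment with one change and one new row.
load_full_refresh(target, [("C-1", "Ada"), ("C-2", "Grace")])
load_incremental(target, [("C-2", "Grace Hopper"), ("C-3", "Edsger")])

loaded = target.execute(
    "SELECT customer_id, name FROM dim_customer ORDER BY customer_id"
).fetchall()
```

The incremental load touches only the two rows it is given, while the full refresh rewrites the whole table, which is why the latter costs more time and resources as the target grows.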

ETL as the core of data integration

ETL, although sometimes considered old amid the many newer technologies surrounding data management, is still very important. It can greatly benefit any organization looking to use data intelligence and insights to strengthen its business position.

Related Product

TeraStream, an ETL tool from Korea’s No. 1 data management company DataStreams, is a high-performance enterprise data integration solution that can extract, transform, and load data of various formats.

It allows you to process large volumes of data in a user-friendly way with its drag-and-drop GUI environment.